Introduction

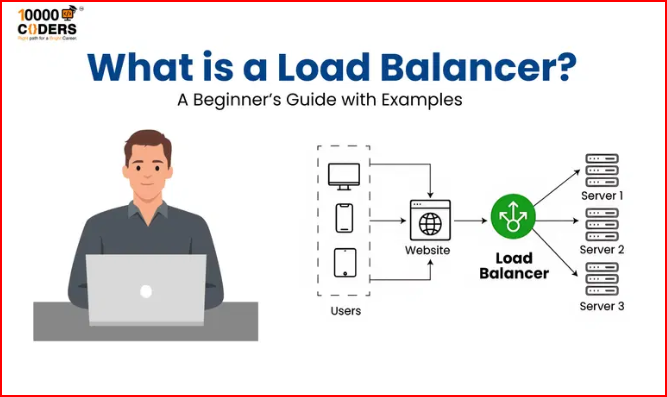

Load balancers are essential components in modern web infrastructure that help distribute incoming network traffic across multiple servers. This guide will explain what load balancers are, why they're important, and how they work, with practical examples to help you understand their implementation.

What is a Load Balancer?

A load balancer acts as a "traffic cop" that sits in front of your servers and routes each client request to one of the servers capable of fulfilling it, in a way that maximizes speed and capacity utilization. This keeps any single server from becoming overwhelmed and degrading performance.

Why Do We Need Load Balancers?

- High Availability
  - Removes individual servers as single points of failure
  - Keeps the service available when a server goes down
  - Handles server failures gracefully
- Scalability
  - Distributes traffic across the server pool
  - Allows horizontal scaling (adding more servers rather than bigger ones)
  - Handles increased load efficiently
- Performance
  - Reduces response times by keeping individual servers from being overloaded
  - Optimizes resource utilization
  - Improves user experience

Types of Load Balancers

1. Application Load Balancer (Layer 7)

Features:

- Content-based routing

- HTTP/HTTPS traffic handling

- Advanced request routing

- SSL termination

Example Configuration (AWS ALB):

```json
{
  "LoadBalancerArn": "arn:aws:elasticloadbalancing:region:account-id:loadbalancer/app/my-load-balancer/1234567890abcdef",
  "Listeners": [
    {
      "Protocol": "HTTP",
      "Port": 80,
      "DefaultActions": [
        {
          "Type": "forward",
          "TargetGroupArn": "arn:aws:elasticloadbalancing:region:account-id:targetgroup/my-targets/1234567890abcdef"
        }
      ]
    }
  ]
}
```
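Content-based routing means a Layer 7 load balancer reads the HTTP request (path, host header, and so on) before choosing where to send it. The sketch below illustrates path-prefix routing under stated assumptions: the target group names are hypothetical, and longest-prefix matching is a choice made for this example (AWS ALB, for instance, evaluates listener rules in priority order instead).

```python
def route_by_path(path, rules, default):
    """Return the target group whose path prefix best matches `path`.

    `rules` maps path prefixes to (hypothetical) target group names;
    the longest matching prefix wins, otherwise `default` is used.
    """
    best_prefix = None
    for prefix in rules:
        if path.startswith(prefix) and (best_prefix is None or len(prefix) > len(best_prefix)):
            best_prefix = prefix
    return rules[best_prefix] if best_prefix is not None else default


# Hypothetical routing table for illustration only
RULES = {
    "/api/": "api-targets",
    "/api/v2/": "api-v2-targets",
    "/static/": "static-targets",
}

print(route_by_path("/api/v2/users", RULES, "default-targets"))  # api-v2-targets
print(route_by_path("/api/orders", RULES, "default-targets"))    # api-targets
print(route_by_path("/index.html", RULES, "default-targets"))    # default-targets
```

A Layer 4 load balancer cannot make this kind of decision, because it never parses the HTTP request.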

2. Network Load Balancer (Layer 4)

Features:

- TCP/UDP traffic handling

- High throughput

- Static IP addresses

- Low latency

Example Configuration (AWS NLB):

```json
{
  "LoadBalancerArn": "arn:aws:elasticloadbalancing:region:account-id:loadbalancer/net/my-load-balancer/1234567890abcdef",
  "Listeners": [
    {
      "Protocol": "TCP",
      "Port": 80,
      "DefaultActions": [
        {
          "Type": "forward",
          "TargetGroupArn": "arn:aws:elasticloadbalancing:region:account-id:targetgroup/my-targets/1234567890abcdef"
        }
      ]
    }
  ]
}
```
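A Layer 4 load balancer decides from connection-level fields alone. The sketch below hashes a connection's 5-tuple (protocol, source IP, source port, destination IP, destination port) so that all packets of one connection reach the same target. The target addresses are hypothetical, and this is a simplified illustration of flow hashing, not AWS's exact algorithm.

```python
import hashlib
from typing import NamedTuple


class Flow(NamedTuple):
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def pick_target(flow, targets):
    """Choose a target using only the Layer 4 fields of the connection."""
    key = "|".join(str(field) for field in flow).encode("utf-8")
    # sha256 is stable across processes, unlike Python's built-in hash()
    digest = hashlib.sha256(key).digest()
    return targets[int.from_bytes(digest[:8], "big") % len(targets)]


# Hypothetical target IPs for illustration
TARGETS = ["10.0.1.10", "10.0.1.11", "10.0.1.12"]

flow = Flow("TCP", "203.0.113.7", 51514, "198.51.100.20", 80)
print(pick_target(flow, TARGETS))
```

Because the source port is part of the key, a new connection from the same client may be sent to a different target.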

Load Balancing Algorithms

1. Round Robin

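```python
class RoundRobinLoadBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self.servers = list(servers)
        self.current_index = 0

    def get_next_server(self):
        server = self.servers[self.current_index]
        # Advance, wrapping back to the first server after the last one
        self.current_index = (self.current_index + 1) % len(self.servers)
        return server
```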

2. Least Connections

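```python
class LeastConnectionsLoadBalancer:
    """Sends each request to the server with the fewest active connections."""

    def __init__(self, servers):
        # Map each server to its current number of active connections
        self.servers = {server: 0 for server in servers}

    def get_next_server(self):
        # Lowest count wins; ties go to the server listed first
        return min(self.servers.items(), key=lambda x: x[1])[0]

    def increment_connections(self, server):
        # Call when a connection to `server` opens
        self.servers[server] += 1

    def decrement_connections(self, server):
        # Call when that connection closes; never drop below zero
        self.servers[server] = max(0, self.servers[server] - 1)
```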
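Least connections only works if every increment is paired with a decrement, including when a request fails. One way to enforce that is a context manager. The self-contained variant below is a sketch; the `connection` method is a hypothetical helper, not part of the class above.

```python
from contextlib import contextmanager


class LeastConnectionsLoadBalancer:
    def __init__(self, servers):
        self.servers = {server: 0 for server in servers}

    def get_next_server(self):
        return min(self.servers.items(), key=lambda x: x[1])[0]

    @contextmanager
    def connection(self):
        """Hypothetical helper: pick a server and hold a connection slot on it."""
        server = self.get_next_server()
        self.servers[server] += 1
        try:
            yield server
        finally:
            # Always release the slot, even if the request raised an exception
            self.servers[server] -= 1


lb = LeastConnectionsLoadBalancer(["server1", "server2"])
with lb.connection() as first:        # server1 (both at 0, first wins the tie)
    with lb.connection() as second:   # server2 (server1 now has 1)
        print(first, second)          # server1 server2
print(lb.servers)                     # {'server1': 0, 'server2': 0}
```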

3. IP Hash

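```python
import zlib


class IPHashLoadBalancer:
    """Maps each client IP to the same server on every request."""

    def __init__(self, servers):
        self.servers = servers

    def get_next_server(self, client_ip):
        # Python's built-in hash() is randomized per process for strings, so use
        # a stable checksum to keep the mapping consistent across restarts
        hash_value = zlib.crc32(client_ip.encode("utf-8"))
        return self.servers[hash_value % len(self.servers)]
```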
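Because the server is chosen with `hash_value % len(self.servers)`, the mapping is stable only while the server list stays the same. The self-contained sketch below (client IPs and server names are made up) uses `zlib.crc32` as a stable hash and counts how many clients change servers when a fourth server is added. For a well-spread hash, expect roughly three quarters: a client keeps its server only when `h % 3 == h % 4`, which holds for 3 of every 12 hash values.

```python
import zlib


def pick(client_ip, servers):
    # Stable hash: Python's built-in hash() is randomized per process for strings
    return servers[zlib.crc32(client_ip.encode("utf-8")) % len(servers)]


clients = [f"192.0.2.{i}" for i in range(1, 201)]
three = ["server1", "server2", "server3"]
four = three + ["server4"]

moved = sum(pick(ip, three) != pick(ip, four) for ip in clients)
print(f"{moved} of {len(clients)} clients changed servers")
```

This is why IP-hash stickiness breaks for most users whenever servers are added or removed; consistent hashing is the usual remedy.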

Health Checks

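Load balancers perform health checks so that traffic goes only to servers that are working. In open-source nginx, `max_fails` and `fail_timeout` provide passive health checks: a server with 3 failed requests within 30 seconds is marked unavailable for 30 seconds. The `health_check` directive adds active checks that probe each server on a schedule, but it is available only in the commercial NGINX Plus and must sit in a location that proxies to the upstream group:

```nginx
# Example Nginx health check configuration
http {
    upstream backend {
        zone backend 64k;  # shared memory zone, required by health_check
        server backend1.example.com:8080 max_fails=3 fail_timeout=30s;
        server backend2.example.com:8080 max_fails=3 fail_timeout=30s;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
            # Active checks (NGINX Plus only): probe /health on each server every 5s
            health_check uri=/health interval=5s fails=3 passes=2;
        }
    }
}
```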
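Active health checks with settings like `interval=5s fails=3 passes=2` boil down to a small state machine: a server is marked down after `fails` consecutive failed probes and back up after `passes` consecutive successes. A minimal sketch of that logic, with the network probe left out; the `HealthTracker` class and the probe results are hypothetical.

```python
class HealthTracker:
    """Tracks one server's health from consecutive probe results."""

    def __init__(self, fails=3, passes=2):
        self.fails = fails      # consecutive failures before marking down
        self.passes = passes    # consecutive successes before marking up
        self.healthy = True
        self._streak = 0        # length of the current run that disagrees with the state

    def record(self, probe_ok):
        """Record one probe result and return the (possibly updated) state."""
        if probe_ok == self.healthy:
            # Result agrees with the current state, so reset the counter
            self._streak = 0
            return self.healthy
        self._streak += 1
        threshold = self.passes if probe_ok else self.fails
        if self._streak >= threshold:
            self.healthy = probe_ok
            self._streak = 0
        return self.healthy


tracker = HealthTracker(fails=3, passes=2)
results = [True, False, False, False, True, True]
print([tracker.record(ok) for ok in results])
# [True, True, True, False, False, True]
```

Requiring several results in a row keeps a single slow probe from flapping a server in and out of the pool.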

Session Persistence

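Session persistence (sticky sessions) sends every request from the same client to the same backend server, so session data stored on that server stays available. With nginx's `ip_hash`, the client's IP address determines the server:

```nginx
# Example Nginx sticky session configuration
upstream backend {
    ip_hash;  # Sticky sessions based on client IP
    server backend1.example.com:8080;
    server backend2.example.com:8080;
}
```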
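nginx documents that `ip_hash` uses only the first three octets of an IPv4 client address as its hashing key (the whole address for IPv6), so every client in the same /24 network lands on the same server. The sketch below mimics that key selection; `zlib.crc32` and the helper names are stand-ins, not nginx's own hash function.

```python
import ipaddress
import zlib


def ip_hash_key(client_ip):
    """Build the key as ip_hash is documented to: first three
    octets for IPv4, the full address for IPv6."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 4:
        return addr.packed[:3]
    return addr.packed


def pick_server(client_ip, servers):
    # crc32 is a stand-in; nginx uses its own hash function
    return servers[zlib.crc32(ip_hash_key(client_ip)) % len(servers)]


SERVERS = ["backend1.example.com:8080", "backend2.example.com:8080"]

# Two clients in the same /24 always land on the same backend
print(pick_server("198.51.100.10", SERVERS) == pick_server("198.51.100.250", SERVERS))  # True
```

A side effect is that many users behind one corporate NAT or proxy can pile onto a single backend.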

SSL Termination

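With SSL/TLS termination, the load balancer decrypts incoming HTTPS traffic and forwards it to the backend servers as plain HTTP, which takes the encryption work off those servers:

```nginx
# Example Nginx SSL termination
server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /path/to/cert.pem;
    ssl_certificate_key /path/to/key.pem;

    location / {
        # Forward decrypted requests to the "backend" upstream group over HTTP
        proxy_pass http://backend;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```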

Load Balancer Deployment Patterns

1. Active-Passive

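All traffic goes to server1; because server2 is marked `backup`, it receives requests only when server1 fails its health checks:

```
# Example HAProxy active-passive configuration
global
    maxconn 4096
    user haproxy
    group haproxy

defaults
    mode http
    timeout connect 5s
    timeout client  30s
    timeout server  30s

frontend http-in
    bind *:80
    default_backend servers

backend servers
    server server1 192.168.1.10:80 check
    server server2 192.168.1.11:80 check backup  # used only if server1 is down
```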
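The `backup` keyword reduces to a simple selection rule. The sketch below expresses it as a function; the `healthy` map is a hypothetical stand-in for HAProxy's `check` results, and only the documented default of using the first healthy backup is modeled.

```python
def select_active_passive(primaries, backups, healthy):
    """Return the servers eligible for traffic.

    Primaries serve all traffic while at least one is healthy;
    backups are used only when every primary is down.
    """
    live_primaries = [s for s in primaries if healthy.get(s, False)]
    if live_primaries:
        return live_primaries
    live_backups = [s for s in backups if healthy.get(s, False)]
    # HAProxy sends traffic to the first healthy backup unless `option allbackups` is set
    return live_backups[:1]


health = {"server1": True, "server2": True}
print(select_active_passive(["server1"], ["server2"], health))  # ['server1']
health["server1"] = False
print(select_active_passive(["server1"], ["server2"], health))  # ['server2']
```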

2. Active-Active

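Both servers receive requests in turn; a server that fails its health check is skipped until it recovers:

```
# Example HAProxy active-active configuration
# (global and defaults sections omitted for brevity)
frontend http-in
    bind *:80
    default_backend servers

backend servers
    balance roundrobin
    server server1 192.168.1.10:80 check
    server server2 192.168.1.11:80 check
```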
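In the active-active setup, `balance roundrobin` rotates through both servers and `check` takes a failed one out of the rotation. A rough sketch of that combination follows; the `healthy` map stands in for health-check results, and HAProxy's real scheduler also handles weights and is more involved.

```python
class HealthAwareRoundRobin:
    def __init__(self, servers):
        self.servers = list(servers)
        self.index = 0

    def get_next_server(self, healthy):
        """Return the next healthy server in rotation, or None if all are down."""
        for _ in range(len(self.servers)):
            server = self.servers[self.index]
            self.index = (self.index + 1) % len(self.servers)
            if healthy.get(server, False):
                return server
        return None


lb = HealthAwareRoundRobin(["server1", "server2"])
health = {"server1": True, "server2": True}
print([lb.get_next_server(health) for _ in range(4)])  # ['server1', 'server2', 'server1', 'server2']
health["server2"] = False
print([lb.get_next_server(health) for _ in range(3)])  # ['server1', 'server1', 'server1']
```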

Best Practices

- Security
  - Implement SSL/TLS
  - Use WAF (Web Application Firewall)
  - Enable DDoS protection
  - Regular security updates
- Monitoring
  - Track server health
  - Monitor response times
  - Set up alerts
  - Log analysis
- Scaling
  - Auto-scaling groups
  - Dynamic server addition
  - Capacity planning
  - Performance testing

Common Use Cases

- Web Applications
  - High-traffic websites
  - E-commerce platforms
  - Content delivery
  - API services
- Microservices
  - Service discovery
  - API gateway
  - Service mesh
  - Container orchestration
- Database Systems
  - Read replicas
  - Sharding
  - Failover
  - Backup systems

Conclusion

Load balancers are crucial components in modern web infrastructure that help ensure high availability, scalability, and performance. By understanding the different types of load balancers, algorithms, and best practices, you can design robust and efficient systems that can handle high traffic loads while maintaining reliability.

Key Takeaways

- Load balancers distribute traffic across multiple servers

- Different types serve different purposes (Layer 7 vs Layer 4)

- Different algorithms (round robin, least connections, IP hash) suit different use cases

- Health checks keep traffic away from failed servers

- Security and monitoring are essential

- Proper configuration is crucial for performance
